Change Tracking at DynamoDB with CloudTrail Data Events

Get notified about manual changes to, or access to, production data


As a DevOps engineer, it’s complicated to avoid having almighty administrator access to your AWS production account, because you are responsible for everything.

In general, this setup is not compliant, because the average developer should not have any access to production data. With DynamoDB it was even worse, because until recently there was no way to track which roles or identities changed items in your tables. You could attach a Lambda function to a table's stream with the stream view type NEW_AND_OLD_IMAGES, which lets you track changes made to items, but the stream records don't include the ARN of the IAM role or identity that initiated the change.
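For comparison, here is a minimal sketch of what a Streams-based tracker can see. It assumes the usual record shape for NEW_AND_OLD_IMAGES (`dynamodb.OldImage` and `dynamodb.NewImage` in DynamoDB JSON); `changedAttributes` and the sample record are hypothetical and only for illustration.

```javascript
// Hypothetical helper: lists the attribute names that differ between the
// old and new image of a DynamoDB Streams record (NEW_AND_OLD_IMAGES).
function changedAttributes(record) {
  const oldImage = record.dynamodb.OldImage || {}
  const newImage = record.dynamodb.NewImage || {}
  const names = new Set([...Object.keys(oldImage), ...Object.keys(newImage)])
  // Comparing serialized values is enough for a sketch; it is sensitive
  // to key order inside nested maps.
  return [...names]
    .filter(name => JSON.stringify(oldImage[name]) !== JSON.stringify(newImage[name]))
    .sort()
}

// Example record, trimmed to the fields used above (made-up values)
const sampleRecord = {
  eventName: 'MODIFY',
  dynamodb: {
    Keys: { hashKey: { S: '3d6e1b2fdf487316' } },
    OldImage: { hashKey: { S: '3d6e1b2fdf487316' }, name: { S: 'old' } },
    NewImage: { hashKey: { S: '3d6e1b2fdf487316' }, name: { S: 'new' } }
  }
}

console.log(changedAttributes(sampleRecord)) // [ 'name' ]
```

The record tells you what changed, but nothing in it identifies the caller, which is the gap described above.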

CloudTrail for more visibility

Just recently, on March 24, 2021, AWS announced support for DynamoDB data events in CloudTrail. CloudTrail can now log DynamoDB item-level activity to S3 or to CloudWatch Logs.
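Data events are not recorded by default; they have to be enabled on a trail. The following is a rough sketch of the request parameters for CloudTrail's PutEventSelectors API using advanced event selectors. The helper name `dynamoDbDataEventSelectors`, the trail name, and the selector name are assumptions for illustration, not part of the original setup.

```javascript
// Hypothetical helper: builds the parameters for CloudTrail's
// PutEventSelectors API so a trail records DynamoDB write data events.
function dynamoDbDataEventSelectors(trailName, tableArns = []) {
  const fieldSelectors = [
    { Field: 'eventCategory', Equals: ['Data'] },
    { Field: 'resources.type', Equals: ['AWS::DynamoDB::Table'] },
    { Field: 'readOnly', Equals: ['false'] } // write events only
  ]
  // Optionally restrict logging to specific tables
  if (tableArns.length > 0) {
    fieldSelectors.push({ Field: 'resources.ARN', Equals: tableArns })
  }
  return {
    TrailName: trailName,
    AdvancedEventSelectors: [
      { Name: 'DynamoDB write data events', FieldSelectors: fieldSelectors }
    ]
  }
}

// 'my-trail' is a placeholder trail name
const params = dynamoDbDataEventSelectors('my-trail')
console.log(JSON.stringify(params, null, 2))
```

The resulting object should be usable with the AWS CLI, CloudFormation equivalents, or `PutEventSelectorsCommand` from `@aws-sdk/client-cloudtrail`. If you also want to be notified about read access, drop the `readOnly` selector.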

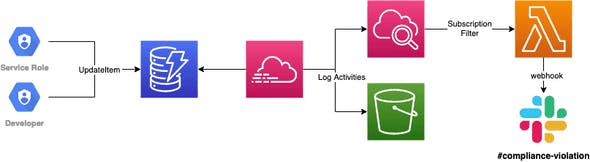

The steps are easy to follow:

1. Changes are made to DynamoDB items via PutItem or UpdateItem.

2. CloudTrail logs those changes to S3 as well as CloudWatch.

3. A subscription filter on our CloudWatch log group forwards those logs to a Lambda function.

4. The Lambda function checks whether the changes were initiated by an expected service role or by a developer.
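To keep the Lambda function from being invoked for unrelated CloudTrail records, the subscription filter can carry a filter pattern. Below is a small sketch that builds such a pattern in the CloudWatch Logs JSON filter syntax; the helper name `dynamoDbFilterPattern` is made up, and the pattern relies on the `eventSource` field that CloudTrail records normally contain.

```javascript
// Hypothetical helper: builds a CloudWatch Logs JSON filter pattern that
// matches CloudTrail records for the given DynamoDB API calls.
function dynamoDbFilterPattern(eventNames) {
  const names = eventNames.map(name => `($.eventName = "${name}")`).join(' || ')
  return `{ ($.eventSource = "dynamodb.amazonaws.com") && (${names}) }`
}

console.log(dynamoDbFilterPattern(['PutItem', 'UpdateItem']))
// { ($.eventSource = "dynamodb.amazonaws.com") && (($.eventName = "PutItem") || ($.eventName = "UpdateItem")) }
```

Add further event names such as DeleteItem if you want those covered as well.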

Inside your Lambda function, you’ll receive the event from CloudTrail, which looks like this (you need to decode it first, because the payload is base64-encoded and gzip-compressed):

    {
      "eventVersion": "1.08",
      "userIdentity": {
        "type": "AssumedRole",
        "principalId": "ARXXXXXXXXXXXXXXXXXX:max.mustermann@company.ag",
        "arn": "arn:aws:sts::XXXXXXXXXXXX:assumed-role/myteam-administrators/max.mustermann@company.ag",
        "accountId": "xxxxxxxxxx",
        "accessKeyId": "xxxxxxxxxxxxx"
      },
      "eventTime": "2021-03-31T14:33:02Z",
      "eventName": "PutItem",
      "requestParameters": {
        "tableName": "services_profile",
        "key": {
          "hashKey": "3d6e1b2fdf487316"
        },
        "items": ["name"]
      },
      "responseElements": null,
      "readOnly": false,
      "eventType": "AwsApiCall",
      "apiVersion": "2012-08-10",
      "managementEvent": false,
      "recipientAccountId": "343224565791",
      "eventCategory": "Data"
    }
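If you want to try the decoding locally, the following sketch wraps CloudTrail records the way CloudWatch Logs delivers them to a subscribed Lambda function (JSON, gzip, base64 under `event.awslogs.data`) and unwraps them again. The function names `toAwsLogsEvent` and `fromAwsLogsEvent` are made up for this example, and the payload is reduced to the fields needed here.

```javascript
const zlib = require('zlib')

// Wraps CloudTrail records the way CloudWatch Logs delivers them to Lambda:
// JSON -> gzip -> base64 under event.awslogs.data.
function toAwsLogsEvent(trailRecords) {
  const payload = {
    messageType: 'DATA_MESSAGE',
    logEvents: trailRecords.map((record, index) => ({
      id: String(index),
      timestamp: Date.parse(record.eventTime),
      message: JSON.stringify(record)
    }))
  }
  const data = zlib.gzipSync(Buffer.from(JSON.stringify(payload))).toString('base64')
  return { awslogs: { data } }
}

// Reverses the wrapping: base64 -> gunzip -> JSON -> parsed CloudTrail records.
function fromAwsLogsEvent(event) {
  const json = zlib.gunzipSync(Buffer.from(event.awslogs.data, 'base64')).toString('utf8')
  return JSON.parse(json).logEvents.map(logEvent => JSON.parse(logEvent.message))
}

const sample = { eventTime: '2021-03-31T14:33:02Z', eventName: 'PutItem' }
const decoded = fromAwsLogsEvent(toAwsLogsEvent([sample]))
console.log(decoded[0].eventName) // PutItem
```

An event built with `toAwsLogsEvent` can be passed straight to a handler for a quick local test without touching AWS.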

It’s now easy to identify the origin of a change, which table item was changed, and which fields were updated. You can match against expected roles or identities and send out notifications for everything else, e.g. to your Slack channel via a webhook.

    const util = require('util')
    const zlib = require('zlib') // built into Node.js, no extra dependency needed

    const unzip = util.promisify(zlib.unzip)

    // isKnown(trail) and sendSlackAlert(change) are helpers you provide yourself

    module.exports.handler = async (event) => {
      // CloudWatch Logs delivers the payload base64-encoded and gzip-compressed
      const data = await unzip(Buffer.from(event.awslogs.data, 'base64'))
      // each log event message is one CloudTrail record serialized as JSON
      const trails = JSON.parse(data.toString()).logEvents.map(logEvent => JSON.parse(logEvent.message))

      // filter out expected events through your backend service
      const untrackedChanges = trails.filter(trail => !isKnown(trail))

      // send out alerts!
      await Promise.all(untrackedChanges.map(change => sendSlackAlert(change)))
    }
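The handler relies on two helpers, `isKnown` and `sendSlackAlert`, whose implementation depends on your setup. One possible sketch follows. The role name in `EXPECTED_ROLES`, the `SLACK_WEBHOOK_URL` environment variable, and the helper names `roleNameOf` and `formatAlert` are assumptions; the global `fetch` requires a Node.js 18+ runtime.

```javascript
// Assumption: role names your backend services use to write to DynamoDB.
const EXPECTED_ROLES = new Set(['my-backend-service-role'])

// Extracts the role name from an assumed-role ARN such as
// arn:aws:sts::123456789012:assumed-role/<role-name>/<session-name>
function roleNameOf(trail) {
  const arn = (trail.userIdentity && trail.userIdentity.arn) || ''
  const match = arn.match(/:assumed-role\/([^/]+)\//)
  return match ? match[1] : null
}

// A change is "known" when it was made through one of the expected roles.
function isKnown(trail) {
  return EXPECTED_ROLES.has(roleNameOf(trail))
}

// Builds the Slack message body for an unexpected change.
function formatAlert(trail) {
  const { tableName, key } = trail.requestParameters || {}
  return {
    text: `${trail.eventName} on table ${tableName} by ${trail.userIdentity.arn} ` +
      `at ${trail.eventTime} (key: ${JSON.stringify(key)})`
  }
}

// Posts the alert to a Slack incoming webhook (URL taken from the environment).
async function sendSlackAlert(trail) {
  const response = await fetch(process.env.SLACK_WEBHOOK_URL, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(formatAlert(trail))
  })
  if (!response.ok) throw new Error(`Slack webhook returned ${response.status}`)
}
```

Matching on the role name rather than the full ARN keeps the check independent of the session name, which for assumed roles is often the developer's login.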

Of course, if you have administrator rights, there are many ways to make manual changes without triggering alerts. This solution is only meant to give large teams a hint for tracking unwanted manual changes in their AWS account.