Step-By-Step: Emptying S3 Buckets and Directories Using the AWS CLI with S3 RM

The AWS S3 rm command is a versatile tool for removing objects from an Amazon S3 bucket: a single file, multiple files, whole folders, or every file whose key starts with a given prefix.

In this article, we walk you through using the AWS CLI to empty S3 buckets and directories step by step. We also cover best practices for the AWS S3 rm command that help prevent accidental data loss.

Getting Started with the AWS CLI

💡 If you already have the AWS CLI installed and configured, you can skip this section and go straight to How to Use the AWS S3 rm.

If you haven't already, install the AWS CLI and set up your credentials on your local machine. You need both to run the rm command. Note that we only cover the minimum setup using an AWS Access Key ID and Secret Access Key; AWS profiles and Multi-Factor Authentication are out of scope.

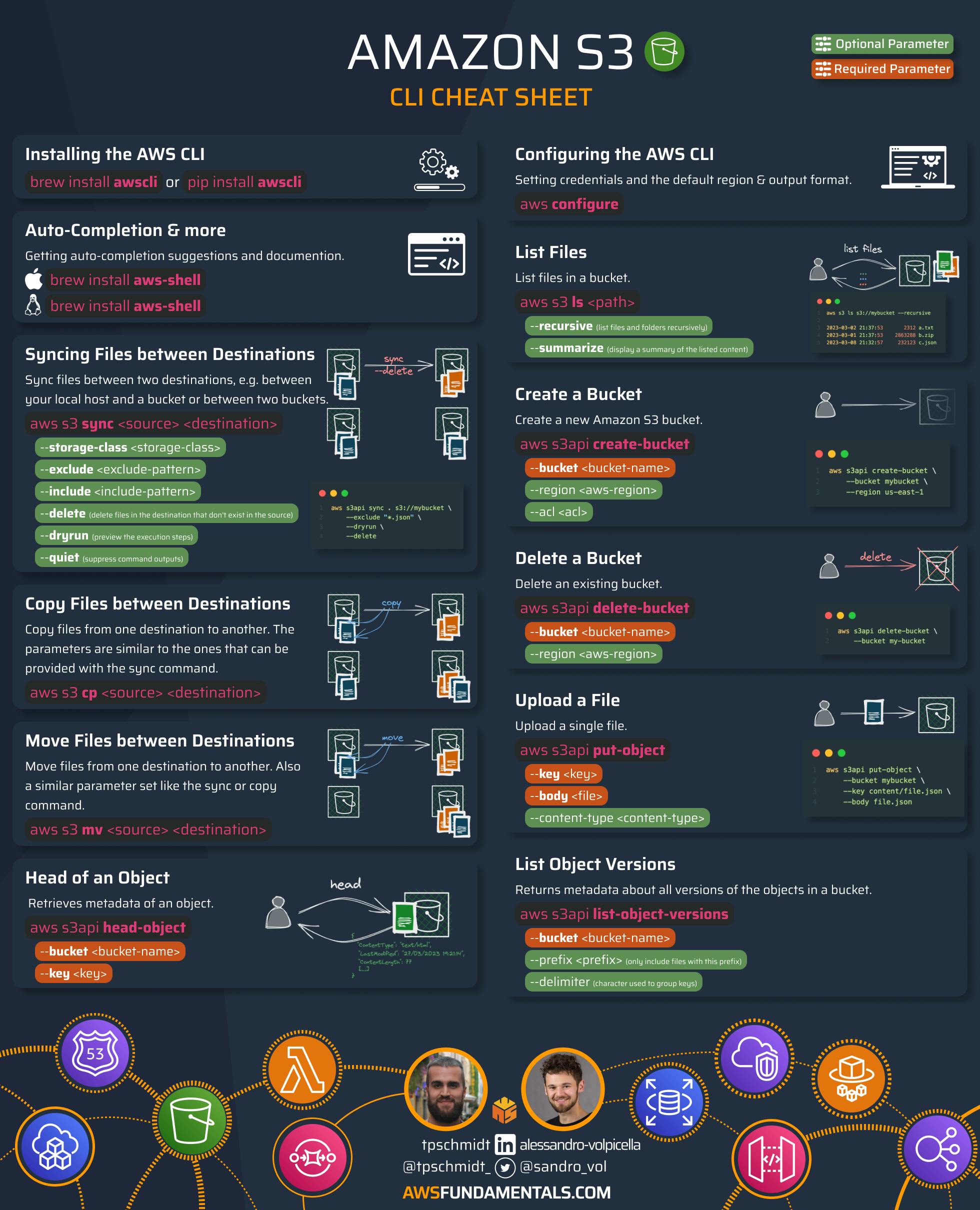

Installing the AWS Command Line Interface

To get the most recent version of the AWS CLI, follow the installation instructions for your operating system in the AWS CLI documentation.

If you're running macOS, you can also install the CLI with Homebrew by running brew install awscli.
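To check that the installation worked, you can ask the CLI for its version. The snippet below is a small sketch that assumes a Bash-compatible shell; check_aws_cli is a hypothetical helper name, not part of the AWS CLI.

```bash
# Hypothetical helper: report whether the AWS CLI is available and which version it is.
check_aws_cli() {
  if ! command -v aws >/dev/null 2>&1; then
    echo "AWS CLI not found; install it before continuing." >&2
    return 1
  fi
  # Prints the CLI version string, which starts with "aws-cli/".
  aws --version
}
```

Running check_aws_cli after installing should print a line beginning with aws-cli/ followed by the version number.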

The AWS Command Line Interface is a comprehensive tool for programmatically managing your AWS resources. With it, you can easily manage any service from your terminal and automate processes using scripts.

⚠️ The AWS CLI is not a replacement for an Infrastructure-as-Code tool. Although you can script resource creation with it, the CLI keeps no state that reflects which resources actually exist in your account. Reserve the CLI for small, one-off tasks, and build the infrastructure of your application ecosystem with dedicated tools such as Terraform, the AWS CDK, or CloudFormation.

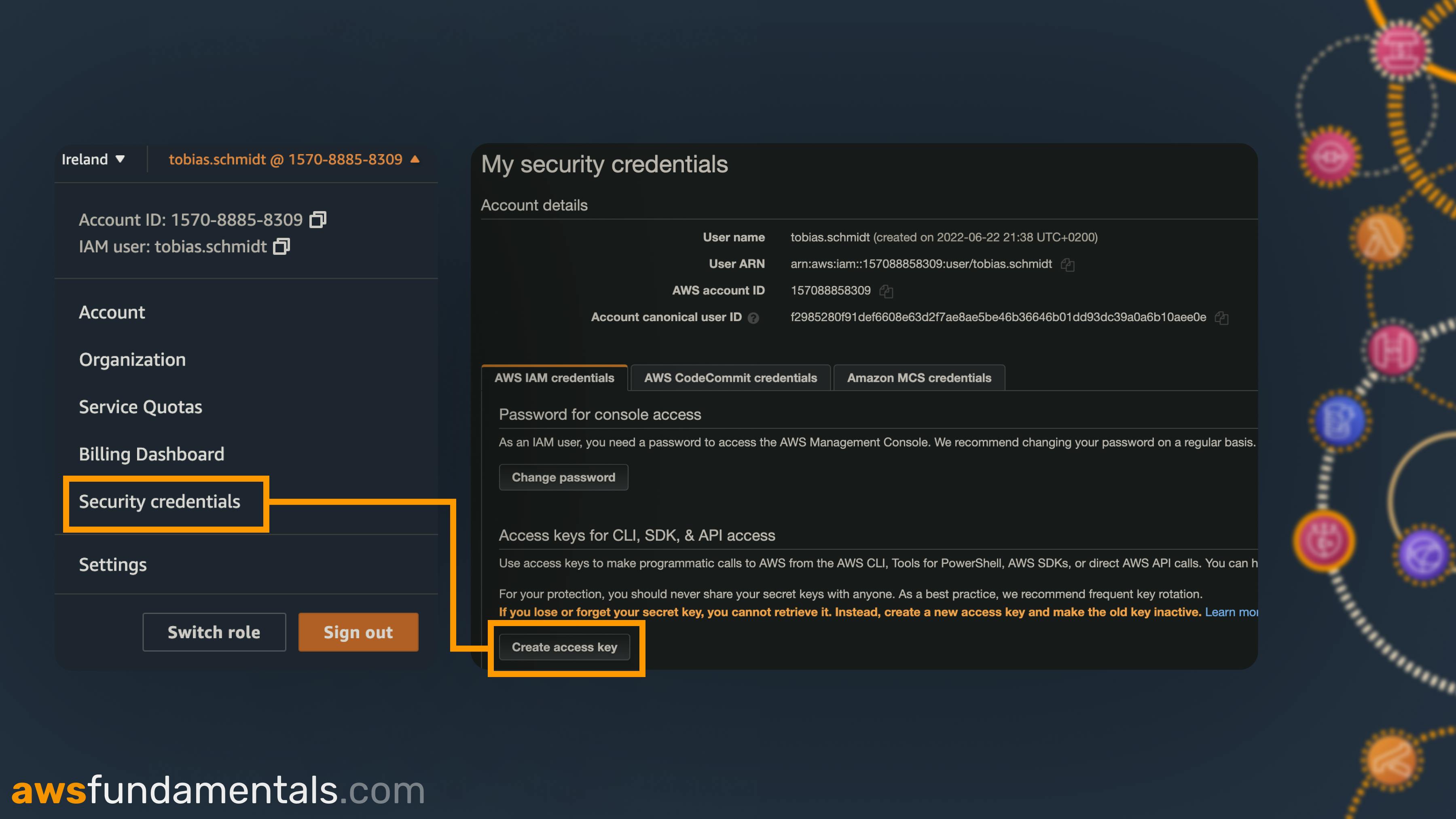

Configuration

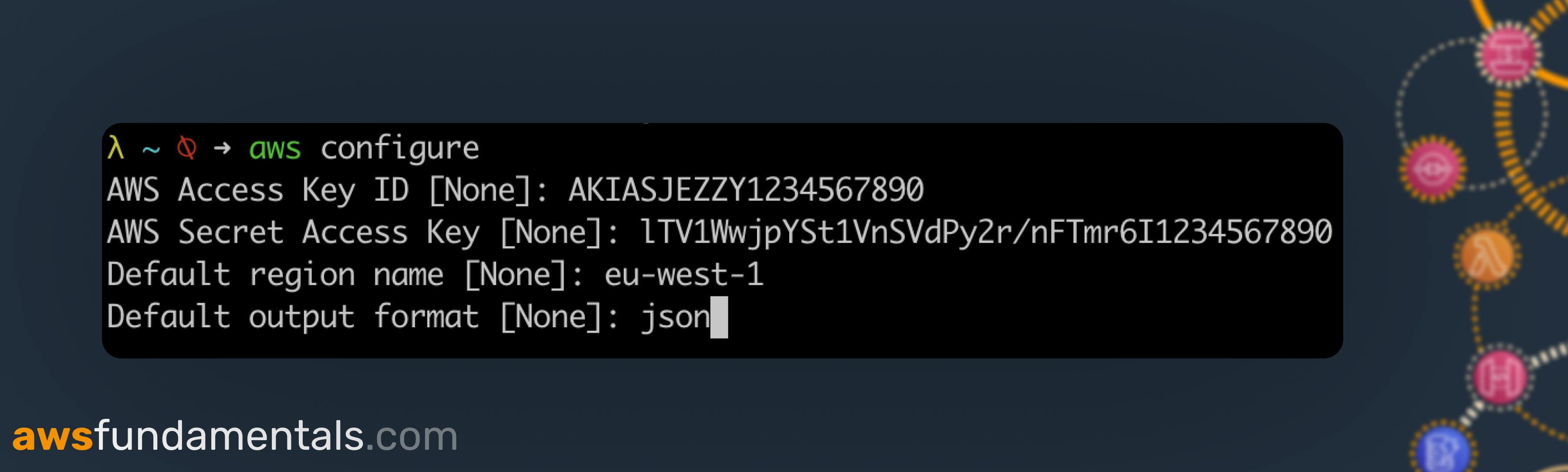

To access the AWS API with the tool we just installed, we need to configure our account credentials. In the AWS Management Console, navigate to your Security credentials page.

Click Create access key to generate a new Access Key ID and Secret Access Key. Copy both before closing the creation window, because the Secret Access Key cannot be retrieved later!

Back in your terminal, run aws configure and follow the prompts to enter your keys, a default region (such as eu-west-1), and a default output format (such as json).

To confirm that everything works as expected, run aws sts get-caller-identity. If the command returns without errors and shows your 12-digit AWS account ID, we're good to go.
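If you script any of the deletions in this guide, it can be worth verifying which account you are about to touch before running them. The sketch below wraps the same aws sts get-caller-identity call; current_account_id is a hypothetical helper name, and --query Account --output text simply extracts the account ID field from the response.

```bash
# Hypothetical helper: print the 12-digit account ID of the configured credentials,
# or fail if the credentials are missing or invalid.
current_account_id() {
  local account
  # --query Account --output text prints only the account ID field.
  account=$(aws sts get-caller-identity --query Account --output text) || return 1
  case "$account" in
    [0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]) echo "$account" ;;
    *) echo "Unexpected response: $account" >&2; return 1 ;;
  esac
}
```

A script could start with current_account_id || exit 1 so that it stops early when no valid credentials are configured.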

How to Use the AWS S3 rm

Removing a Single File

To delete a specific file from an S3 bucket, pass the file's full S3 path: the bucket name followed by the object key.

aws s3 rm s3://<bucket-name>/<path-to-file>

# example

aws s3 rm s3://aws-fun-bucket/content/s3.pdf

This will remove the file named s3.pdf from the designated path within the aws-fun-bucket bucket.
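S3's DeleteObject operation does not fail when the key does not exist, so a typo in the path can go unnoticed. One way to guard against that, sketched below under the assumption that you want a hard failure for missing keys, is to check the key with aws s3api head-object first. rm_existing_object is a hypothetical helper name.

```bash
# Hypothetical helper: delete one object, but only if the exact key exists.
# head-object exits non-zero when the key is missing or not accessible.
rm_existing_object() {
  local bucket="$1" key="$2"
  if ! aws s3api head-object --bucket "$bucket" --key "$key" >/dev/null 2>&1; then
    echo "s3://$bucket/$key not found; nothing deleted." >&2
    return 1
  fi
  aws s3 rm "s3://$bucket/$key"
}
```

For the example above, you would call rm_existing_object aws-fun-bucket content/s3.pdf.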

Removing Multiple Files

To delete multiple files at once, add the --recursive flag and narrow the selection with --exclude and --include filters.

aws s3 rm s3://<bucket-name>/<path> \
  --exclude <exclusion-pattern> \
  --include <inclusion-pattern> \
  --recursive

# example

aws s3 rm s3://aws-fun-bucket/content \
  --exclude "*" \
  --include "*.pdf" \
  --recursive

Our example deletes all PDF files in the content directory, including those in its subfolders. The syntax may look odd at first, but it makes sense once you break it down.

First, --exclude "*" excludes every file, because the wildcard pattern * matches all keys. Then --include "*.pdf" adds back only the files with the desired extension. Filters that appear later in the command take precedence, which is why the exclusion must come before the inclusion.
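This filter logic can be wrapped in a small function so the exclude/include order is always right. The sketch below is illustrative only: rm_by_extension is a hypothetical helper, and any extra arguments (such as --dryrun) are passed through to aws s3 rm unchanged.

```bash
# Hypothetical helper: recursively delete every object under a path whose key ends in .<ext>.
# Filter order matters: the later --include overrides the earlier --exclude "*".
rm_by_extension() {
  if [ "$#" -lt 2 ]; then
    echo "usage: rm_by_extension <s3-path> <extension> [extra aws s3 rm args]" >&2
    return 1
  fi
  local s3_path="$1" ext="$2"
  shift 2
  # Remaining arguments (for example --dryrun) go straight to aws s3 rm.
  aws s3 rm "$s3_path" --recursive --exclude "*" --include "*.${ext}" "$@"
}
```

For example, rm_by_extension s3://aws-fun-bucket/content pdf --dryrun previews the same deletion as the example command.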

Removing a Folder

Deleting a folder is even simpler than the previous command. We only need the --recursive flag; no inclusion or exclusion patterns are required.

aws s3 rm s3://<bucket-name>/<path>/ \
  --recursive

# example

aws s3 rm s3://aws-fun-bucket/content/ --recursive

Unlike the previous example, this command deletes everything within the content folder and its subdirectories.
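Because S3 has no real folders, a "folder" is just a key prefix. A slightly more defensive version of the command, sketched below, always ends the prefix with a slash so it stops at a folder boundary, and refuses to run when no folder name is given so it can't be pointed at the bucket root by accident. rm_folder is a hypothetical helper name.

```bash
# Hypothetical helper: delete everything under one "folder" (key prefix).
# A trailing slash keeps the prefix on a folder boundary, so content/ never
# matches a sibling prefix such as content-archive/.
rm_folder() {
  if [ "$#" -lt 2 ]; then
    echo "usage: rm_folder <bucket> <folder> [extra aws s3 rm args]" >&2
    return 1
  fi
  local bucket="$1" folder="${2%/}"   # drop one trailing slash if the caller added it
  shift 2
  if [ -z "$folder" ]; then
    echo "Refusing to run: no folder given (this would target the bucket root)." >&2
    return 1
  fi
  # Any extra arguments, such as --dryrun, are passed through unchanged.
  aws s3 rm "s3://${bucket}/${folder}/" --recursive "$@"
}
```

Calling rm_folder aws-fun-bucket content --dryrun previews the deletion before you run it for real.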

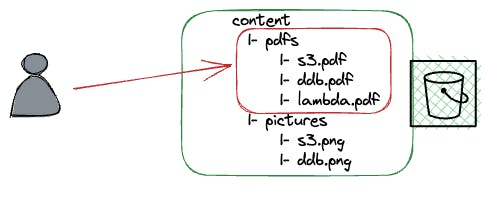

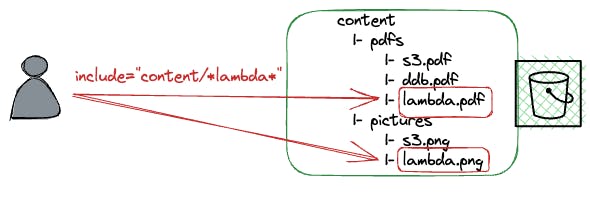

Removing Files with a Path Prefix

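By combining the --recursive flag with include/exclude patterns, we can delete only the files whose key matches a specific path prefix.

aws s3 rm s3://aws-fun-bucket \
  --exclude "*" \
  --include "content/*.pdf" \
  --recursive

In this example, we delete only PDF files under the content directory (including its subfolders, because * also matches /) and leave PDF files elsewhere in the bucket untouched. Because the command runs against the bucket root and filters are evaluated relative to that path, the include pattern has to spell out the content/ prefix.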

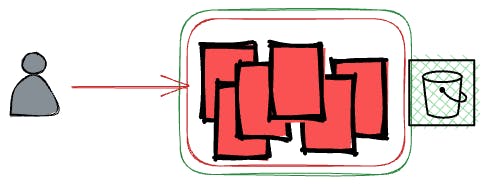

Emptying a Whole Bucket

To delete a bucket, it is necessary to empty it. One way to achieve this is by using the recursive flag on the root path.

aws s3 rm s3://<bucket-name> --recursive

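Keep in mind that this only fully empties the bucket if versioning has never been enabled. On a versioned bucket (including one where versioning is now suspended), aws s3 rm only adds delete markers and every previous version stays in place, so we must also delete each object version and delete marker with the aws s3api delete-objects command.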

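# check whether versioning is enabled ("Enabled" or "Suspended" means old versions may exist)
aws s3api get-bucket-versioning --bucket <bucket-name>

# retrieve every object version (delete-objects accepts up to 1,000 keys per request)
VERSIONS=$(aws s3api list-object-versions \
  --bucket "<bucket-name>" \
  --output=json \
  --query="{ Objects: Versions[].{ Key:Key,VersionId:VersionId } }")

# submit the Key/VersionId list to the delete command
aws s3api delete-objects --bucket <bucket-name> \
  --delete "${VERSIONS}"

# repeat for the delete markers that "aws s3 rm" leaves behind
MARKERS=$(aws s3api list-object-versions \
  --bucket "<bucket-name>" \
  --output=json \
  --query="{ Objects: DeleteMarkers[].{ Key:Key,VersionId:VersionId } }")

aws s3api delete-objects --bucket <bucket-name> \
  --delete "${MARKERS}"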

With these commands, we can empty the bucket even when versioning is enabled. Use them with great care: deleting a specific version is permanent and cannot be undone.
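The commands above send each list of keys in a single delete-objects request, but S3 accepts at most 1,000 keys per request. For larger buckets, a loop along the lines of the sketch below can be used. purge_bucket_versions is a hypothetical helper; it assumes your credentials allow s3:DeleteObjectVersion and that no Object Lock retention protects the versions. It re-lists the bucket after each batch and stops if a batch makes no progress.

```bash
# Hypothetical helper: permanently delete ALL object versions and delete markers.
# Assumes configured credentials and no Object Lock retention on the objects.
purge_bucket_versions() {
  local bucket="$1" field remaining previous batch
  for field in Versions DeleteMarkers; do
    previous=-1
    while :; do
      # Count what is left; "|| `[]`" turns a missing field into an empty list.
      remaining=$(aws s3api list-object-versions --bucket "$bucket" \
        --output text --query "length(${field} || \`[]\`)") || return 1
      [ "$remaining" -eq 0 ] && break
      # Stop instead of looping forever if the last batch removed nothing.
      if [ "$remaining" -eq "$previous" ]; then
        echo "No progress deleting ${field} in ${bucket}; aborting." >&2
        return 1
      fi
      previous=$remaining
      # Build a delete-objects payload for at most 1,000 keys (the per-request limit).
      batch=$(aws s3api list-object-versions --bucket "$bucket" --output json \
        --query "{Objects: ${field}[:1000].{Key: Key, VersionId: VersionId}, Quiet: \`true\`}") || return 1
      # With Quiet set to true, only per-key errors are printed.
      aws s3api delete-objects --bucket "$bucket" --delete "$batch" || return 1
    done
  done
}
```

Once the function returns successfully, aws s3 rb s3://<bucket-name> should be able to delete the now-empty bucket.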

Best Practices for AWS S3 rm

When working with commands this powerful, it's crucial to be careful, because deleted files are not easily recoverable.

How to Prevent Unintentional Loss of Data with the Dryrun Flag

The dry run feature lets you simulate the deletion process without actually deleting any data.

This preview allows you to see which objects are set to be deleted before you execute the command, helping you avoid any potential data loss.

aws s3 rm s3://aws-fun-bucket \
  --exclude "*" \
  --include "content/*.pdf" \
  --recursive \
  --dryrun

To activate the feature, simply add the --dryrun option. This will generate a list of objects that would be deleted, but without actually deleting them. You can then review the list to ensure that no crucial data is accidentally deleted before executing the command without the flag.
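To make the preview step harder to skip, you could wrap it in a function that shows the dry run, counts the matching objects, and asks for confirmation before deleting. safe_s3_rm is a hypothetical helper; it assumes each dry-run output line starts with "(dryrun) delete:", which is the format the AWS CLI uses when previewing rm.

```bash
# Hypothetical wrapper: preview with --dryrun, then ask before deleting for real.
# All arguments are passed to aws s3 rm unchanged.
safe_s3_rm() {
  local preview count answer
  preview=$(aws s3 rm "$@" --dryrun) || return 1
  # Each object that would be deleted appears as "(dryrun) delete: s3://...".
  count=$(printf '%s\n' "$preview" | grep -c '^(dryrun) delete:')
  if [ "$count" -eq 0 ]; then
    echo "Nothing matches; nothing to delete."
    return 0
  fi
  printf '%s\n' "$preview"
  printf 'Delete these %s object(s)? Type "yes" to continue: ' "$count"
  read -r answer
  if [ "$answer" != "yes" ]; then
    echo "Aborted; nothing deleted."
    return 1
  fi
  aws s3 rm "$@"
}
```

Usage mirrors the command above: safe_s3_rm s3://aws-fun-bucket --recursive --exclude "*" --include "content/*.pdf".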

How to Use Versioning to Protect against Unintended File Deletion

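On a bucket with versioning enabled, the aws s3 rm command does not permanently erase anything by default: it adds a delete marker, and all existing versions of the object, including the one that was current, remain in the bucket as noncurrent versions. As long as you don't delete specific version IDs, the file's history is not easily lost.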
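Because a plain delete only adds a delete marker, you can usually undo it by deleting that marker, which makes the previous version current again. The sketch below does this for one key; restore_deleted_object is a hypothetical helper, and it assumes the key contains no single quotes (they would break the JMESPath filter).

```bash
# Hypothetical helper: "undelete" one object in a versioned bucket by removing
# its latest delete marker, which makes the previous version current again.
restore_deleted_object() {
  local bucket="$1" key="$2" marker
  # --prefix narrows the listing; the Key filter keeps only this exact key.
  marker=$(aws s3api list-object-versions --bucket "$bucket" --prefix "$key" \
    --output text \
    --query "DeleteMarkers[?IsLatest && Key=='${key}'].VersionId | [0]") || return 1
  # Text output prints "None" when the query result is null.
  if [ -z "$marker" ] || [ "$marker" = "None" ]; then
    echo "No current delete marker for s3://$bucket/$key" >&2
    return 1
  fi
  aws s3api delete-object --bucket "$bucket" --key "$key" --version-id "$marker"
}
```

For instance, restore_deleted_object aws-fun-bucket content/s3.pdf would bring back the PDF removed in the single-file example, provided versioning was enabled at the time.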

It is advisable to keep versioning enabled for several reasons: it helps prevent data loss, helps you meet governance and compliance requirements, and lets you track when and how files were modified over time.
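If versioning is not yet turned on for a bucket, you can enable it from the CLI with put-bucket-versioning. The sketch below also reads the setting back to confirm it; enable_versioning is a hypothetical helper name.

```bash
# Hypothetical helper: turn on versioning for a bucket and confirm the new status.
enable_versioning() {
  local bucket="$1" status
  aws s3api put-bucket-versioning --bucket "$bucket" \
    --versioning-configuration Status=Enabled || return 1
  # Status reads "Enabled" once versioning is on ("None" in text output if never set).
  status=$(aws s3api get-bucket-versioning --bucket "$bucket" \
    --query Status --output text) || return 1
  if [ "$status" != "Enabled" ]; then
    echo "Versioning status is: $status" >&2
    return 1
  fi
  echo "Versioning enabled on $bucket"
}
```

Keep in mind that noncurrent versions are stored, and billed, until they are deleted, for example by a lifecycle rule.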

Conclusion

The AWS S3 rm command is a powerful tool for managing objects in an Amazon S3 bucket. With the AWS CLI, you can easily remove single files, multiple files, folders, and files with a specific path prefix. However, it is important to follow best practices to prevent accidental data loss.

By using the dry run feature and versioning, you can greatly reduce the risk of losing data while performing necessary deletions.

Remember to use specialized tools like Terraform, the AWS CDK, CloudFormation, or Pulumi for constructing the infrastructure of your application ecosystem.

Frequently Asked Questions

What is the AWS CLI?

The AWS CLI is a comprehensive tool for programmatically managing AWS resources. With it, you can manage any service from your terminal and automate processes using scripts.

What is the dry run feature?

The dry run feature (the --dryrun flag) lets you simulate a deletion with the AWS S3 rm command without actually deleting any data. It lists the objects that would be deleted, so you can review them before running the command for real.

Can I delete a whole bucket using the AWS S3 rm command?

Before deleting a bucket, you must empty it. One approach is to use the --recursive flag on the root path. However, if versioning is enabled, this only adds delete markers and leaves every previous version in place. To erase all files in a versioned bucket, you must list every object version and delete marker and pass them to the aws s3api delete-objects command.